Ron Swanson famously said, "I know what I'm about, son." It's the kind of unwavering confidence we wish we all had. But when it comes to large language models (LLMs) like ChatGPT, Claude, or Gemini, the reality is quite different. These systems don't know anything, at least not in the way Ron knows he wants all the bacon and eggs they have.

So how do they generate those impressively coherent responses? One word at a time, through probability.

In previous posts, we explained how language models understand text through vector embeddings and use that understanding to power features like semantic search. Now let's look at the other side: how these models create new text.

Autocomplete, but way more sophisticated

At its core, an LLM is a next-token predictor. Given a sequence of words (or more precisely, tokens, which can be whole words or pieces of words), the model calculates a probability for every token in its vocabulary that could come next. Then it picks one.

Think of it like the autocomplete on your phone, except instead of suggesting three words based on your recent texts, an LLM considers patterns learned from vast amounts of text to rank tens of thousands of possibilities.
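To make "calculates a probability" a little more concrete, here is a minimal sketch in Python. It assumes the model has already produced a raw score (often called a logit) for each candidate token, and it uses the standard softmax function to turn those scores into probabilities. The four tokens and their scores are invented for illustration; a real model scores its entire vocabulary.

```python
import math

def softmax(logits):
    """Convert raw scores (logits) into probabilities that sum to 1."""
    # Subtracting the largest score keeps exp() numerically stable
    # without changing the resulting probabilities.
    peak = max(logits.values())
    exps = {tok: math.exp(score - peak) for tok, score in logits.items()}
    total = sum(exps.values())
    return {tok: e / total for tok, e in exps.items()}

# Hypothetical scores for what might follow "I want all the bacon and"
toy_logits = {"eggs": 4.0, "cheese": 2.5, "toast": 2.0, "spreadsheets": -1.0}

probs = softmax(toy_logits)

# Rank the candidates from most to least likely.
ranked = sorted(probs.items(), key=lambda item: item[1], reverse=True)
for token, p in ranked:
    print(f"{token:>12}: {p:.3f}")
```

Higher scores become higher probabilities, but every candidate keeps at least a small chance of being chosen.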

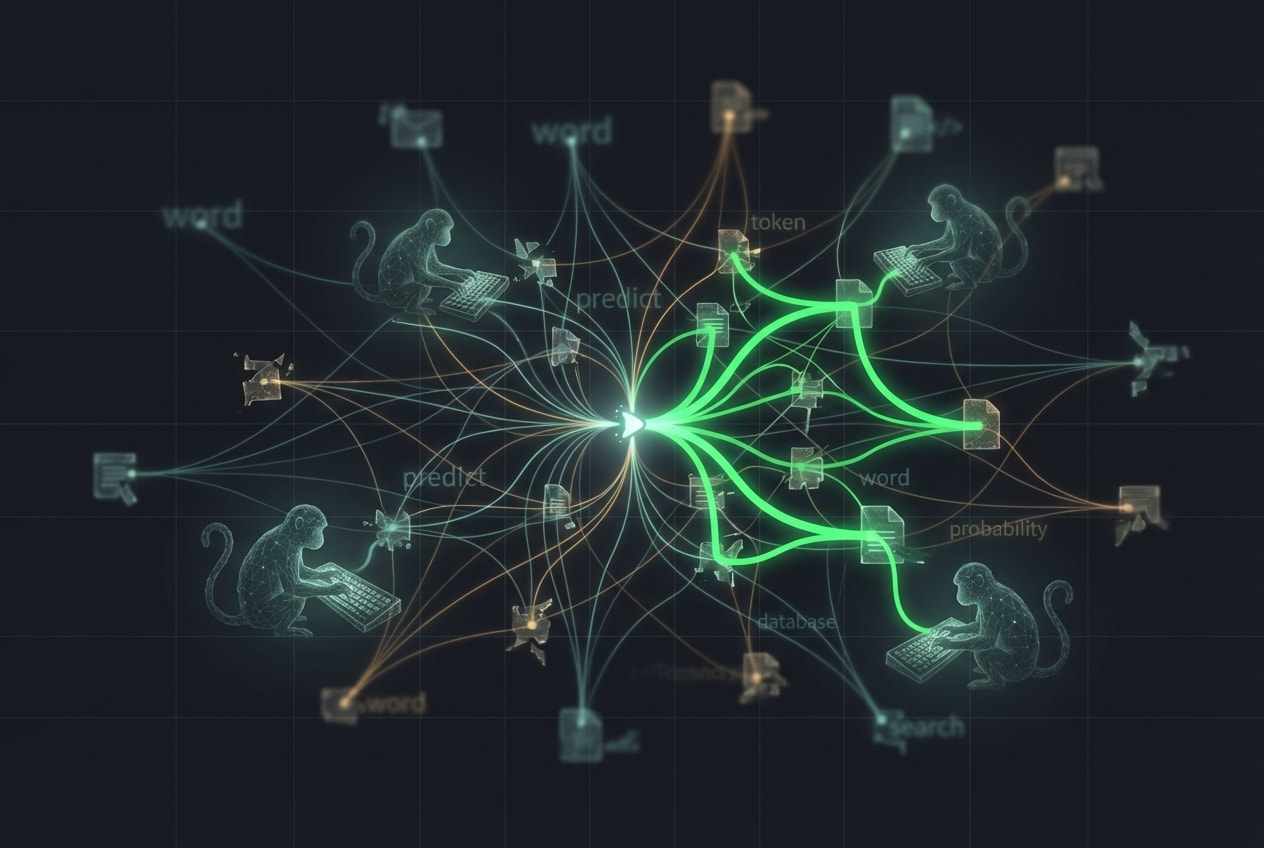

The animation below walks through this process step by step:

Once a token is selected, it gets added to the sequence, and the whole process repeats. The model reads the updated sequence, recalculates probabilities, samples a token, and continues. This happens many times per second, until the model produces a special end-of-sequence token or hits a length limit.
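The loop described above can be sketched in a few lines of Python. This is a toy, not how any real LLM is built: `TOY_MODEL` is a hypothetical hand-written lookup table that only looks at the most recent token, while a real model considers the whole sequence. The `<start>` and `<end>` markers are also assumptions made for the example.

```python
import random

# Hypothetical stand-in for a model: for each token, the possible
# next tokens and their probabilities.
TOY_MODEL = {
    "<start>": {"I": 1.0},
    "I": {"know": 0.7, "want": 0.3},
    "know": {"what": 1.0},
    "what": {"I'm": 1.0},
    "I'm": {"about": 1.0},
    "about": {"<end>": 1.0},
    "want": {"bacon": 0.6, "eggs": 0.4},
    "bacon": {"<end>": 1.0},
    "eggs": {"<end>": 1.0},
}

def generate(rng, max_tokens=20):
    """Repeat predict, sample, append until <end> or the length limit."""
    sequence = ["<start>"]
    for _ in range(max_tokens):
        # 1. Look up the probabilities for the next token.
        options = TOY_MODEL[sequence[-1]]
        # 2. Sample one token, weighted by probability.
        next_token = rng.choices(
            list(options), weights=list(options.values()), k=1
        )[0]
        # 3. Stop on the end marker; otherwise append and go again.
        if next_token == "<end>":
            break
        sequence.append(next_token)
    return sequence[1:]  # drop the <start> marker

rng = random.Random(0)  # seeded only so the example is repeatable
print(" ".join(generate(rng)))
```

The `max_tokens` argument plays the role of the length limit: even if the end marker never comes up, the loop still stops.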

Settings that shape the output

You may have noticed two settings in the animation: temperature and top-K. These are controls that influence how "creative" or "predictable" the model's output will be.

Temperature adjusts how much weight the model gives to less likely options. A low temperature makes the model conservative, almost always picking the highest-probability token. A high temperature flattens the probabilities, making surprising choices more likely.
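Here is a rough sketch of how temperature is commonly applied: the raw scores are divided by the temperature before being converted to probabilities. The function name and the toy scores are made up for this example, and individual products may implement the setting differently.

```python
import math

def softmax_with_temperature(logits, temperature):
    """Divide each score by the temperature, then apply softmax."""
    if temperature <= 0:
        raise ValueError("temperature must be positive in this sketch")
    scaled = [score / temperature for score in logits]
    peak = max(scaled)  # subtract the max for numerical stability
    exps = [math.exp(s - peak) for s in scaled]
    total = sum(exps)
    return [e / total for e in exps]

# Hypothetical scores for four candidate tokens, best first.
toy_logits = [4.0, 2.5, 2.0, -1.0]

for t in (0.2, 1.0, 2.0):
    probs = softmax_with_temperature(toy_logits, t)
    print(f"temperature {t}: {[round(p, 3) for p in probs]}")
```

At a low temperature the top candidate takes nearly all of the probability. At a high temperature the gap between candidates shrinks, so the long shots get picked more often.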

Top-K filtering narrows the field before sampling. If top-K is set to 5, the model only considers the five most likely tokens and ignores the rest, no matter how high the temperature is set.
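A minimal sketch of top-K filtering, assuming we already have a probability for each candidate. The helper name and the numbers are hypothetical. Real implementations often filter the raw scores before converting them to probabilities, which leads to the same result.

```python
def top_k_filter(probs, k):
    """Keep the k most likely tokens and rescale them to sum to 1."""
    if k < 1:
        raise ValueError("k must be at least 1")
    # Sort candidates from most to least likely and keep the first k.
    kept = sorted(probs.items(), key=lambda item: item[1], reverse=True)[:k]
    total = sum(p for _, p in kept)
    # Everything outside the top k is dropped, so its probability is zero.
    return {tok: p / total for tok, p in kept}

# Hypothetical probabilities for five candidate tokens.
toy_probs = {
    "eggs": 0.50,
    "cheese": 0.25,
    "toast": 0.15,
    "ham": 0.07,
    "spreadsheets": 0.03,
}

filtered = top_k_filter(toy_probs, k=2)
print(filtered)
```

With `k=2`, only "eggs" and "cheese" survive. Because the other tokens are removed entirely, no temperature setting can bring them back.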

When you use a chatbot and notice it sometimes gives slightly different answers to the same question, this is why. The sampling process introduces variability by design.
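To see that variability in miniature, the sketch below compares two ways of choosing from the same probabilities: always taking the top token (often called greedy decoding) versus rolling weighted dice. The tokens and probabilities are invented, and the function names are our own.

```python
import random

tokens = ["eggs", "cheese", "toast"]
probs = [0.6, 0.3, 0.1]  # hypothetical probabilities

def greedy(tokens, probs):
    """Always return the single most likely token."""
    return tokens[probs.index(max(probs))]

def sample(tokens, probs, rng):
    """Return one token chosen at random, weighted by probability."""
    return rng.choices(tokens, weights=probs, k=1)[0]

rng = random.Random()  # unseeded, so results differ from run to run

print("greedy: ", [greedy(tokens, probs) for _ in range(5)])
print("sampled:", [sample(tokens, probs, rng) for _ in range(5)])
```

The greedy line is identical every time. The sampled line will usually be mostly "eggs" with an occasional "cheese" or "toast", and it can change each time you run it.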

What this means for cities

Understanding token prediction helps demystify AI tools. When a chatbot gives you an answer, it's not pulling from a database of facts. It's generating text based on patterns, which means it can produce responses that sound authoritative but aren't necessarily correct.

This is the foundation of what we'll explore in the next post: why LLMs sometimes "hallucinate" information that simply isn't true, and what you can do about it.

For now, the next time you watch ChatGPT typing out a response, you'll know what's happening behind the scenes: probability calculations and dice rolls, repeated faster than you can blink.